YouTube Adds Disclosure Labels In Videos Created With Generative AI

To help combat misinformation as generative AI becomes more common in content creation, YouTube is launching a content-labeling tool within Creator Studio aimed at fostering greater transparency and trust between creators and their audiences.

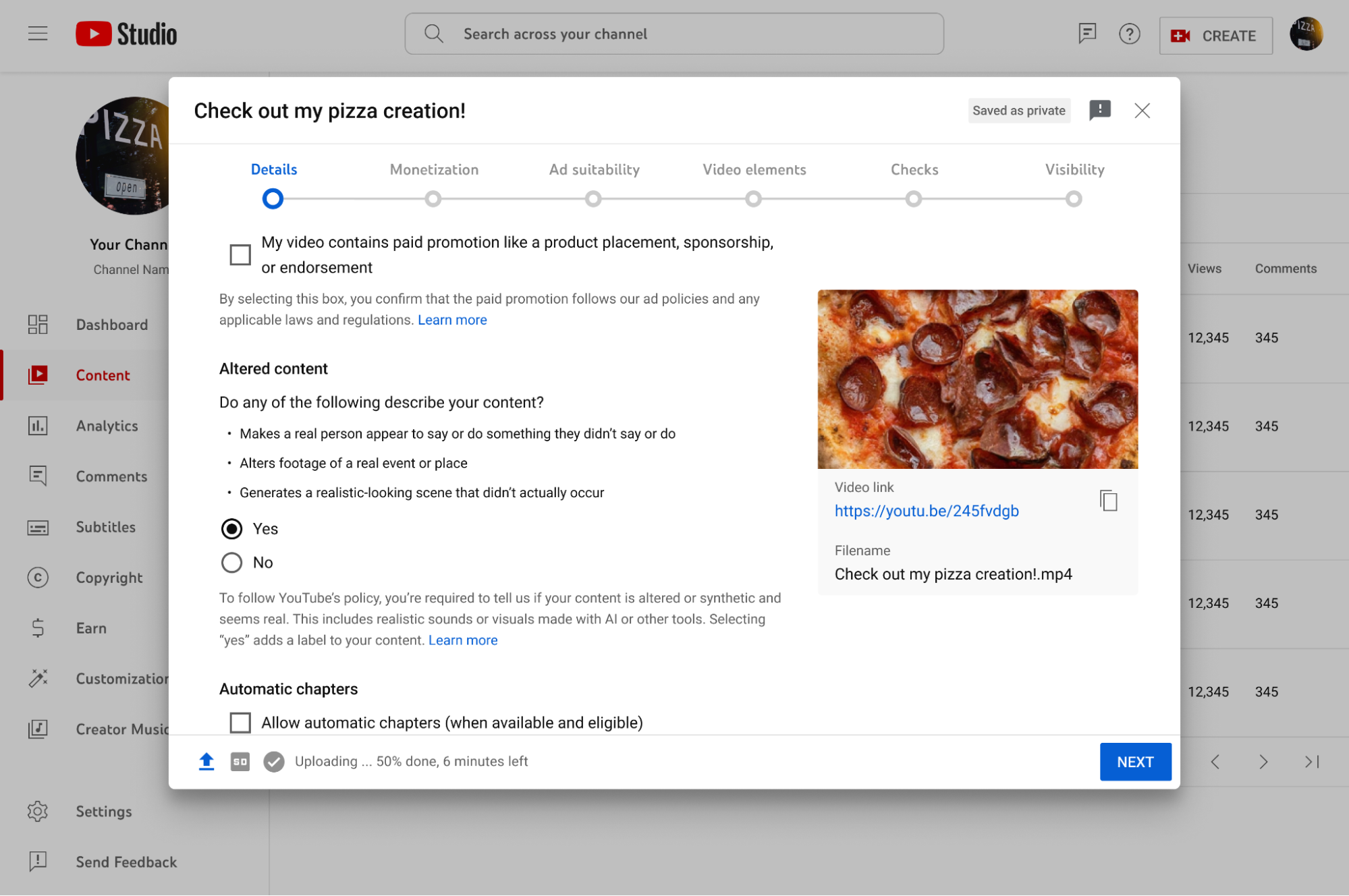

The new tool requires creators to disclose when realistic content, meaning content that viewers could easily mistake for real, is produced using altered or synthetic media, including generative AI.

Some examples of content that requires disclosure include:

● Using the realistic likeness of a person: Digitally altering content to replace the face of one individual with another’s or synthetically generating a person’s voice to narrate a video.

● Altering footage of real events or places: Making real events or places look different from how they actually are or happened, such as making it appear as if a real building caught fire, or altering a real cityscape.

● Generating realistic scenes: Showing a realistic depiction of fictional major events, like a tornado moving toward a real town.

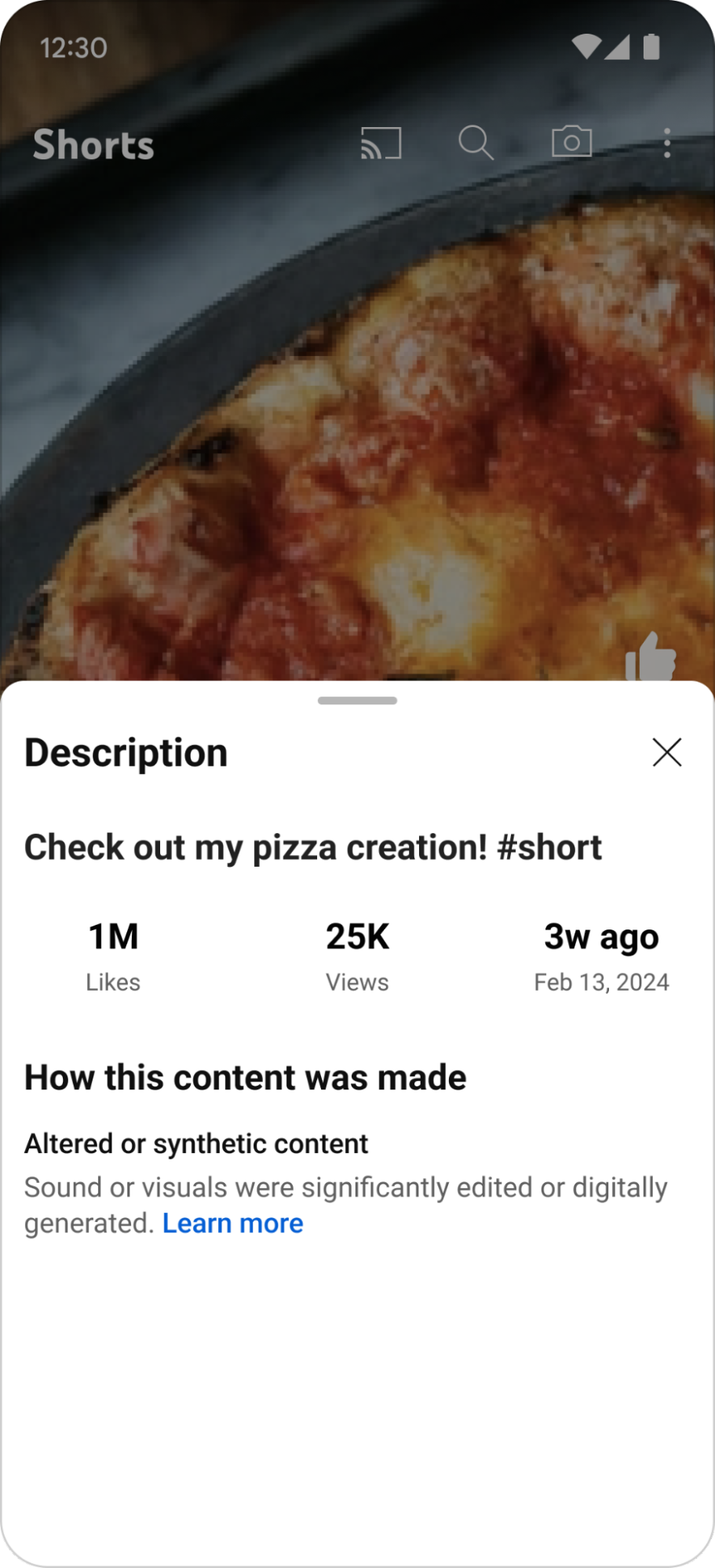

Labels indicating the use of altered or synthetic media will appear in the expanded description and, for videos on sensitive topics such as health or news, more prominently within the video player itself. These labels will roll out gradually across all YouTube platforms in the coming weeks, so that viewers see them consistently wherever they watch.

Google and YouTube recognize the growing need for viewers to discern between altered or synthetic content and authentic material, and this initiative underscores the company’s commitment to responsible AI innovation, building upon the disclosure requirements and labels first announced in November.

However, Google also acknowledges that generative AI serves various legitimate purposes in the creative process, such as generating scripts or enhancing productivity. In these cases, disclosure is not required.

In collaboration with industry partners, Google remains committed to increasing transparency surrounding digital content. As a steering member of the Coalition for Content Provenance and Authenticity (C2PA), Google actively contributes to initiatives promoting trust and accountability in online content.

In addition, Google is working toward an updated privacy process for handling requests to remove AI-generated or synthetic content that simulates identifiable individuals. This global initiative underscores Google’s dedication to safeguarding user privacy and maintaining the integrity of its platforms.

More information about this story is available on Google’s official blog, The Keyword.